Last week I attended a

meetup on API testing with Mark Winteringham. In it, he talked through some HTTP and REST basics, introduced us to

Postman by making requests against his

Restful Booker bed and

breakfast application, and encouraged us to enter the

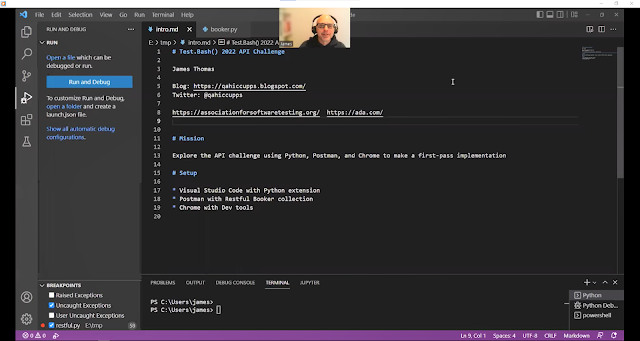

Test.bash() 2022 API Challenge

which was about to close.

The challenge is to make a 20-minute video for use at the Ministry of Testing's October Test.bash() showing the use of automation to check that it's possible to create a room using the Restful Booker API.

I talk and write about exploring with automation a lot (next time is 14th October 2022, for an Association for Software Testing webinar) and I thought it might be interesting to show that I am not a great developer and spend plenty of time Googling, copy-pasting, and introducing and removing typos.

So I did, and my video is now available in the Ministry of Testing Dojo. The script I hacked during the video is up on GitHub.

My code is not beautiful. But then my mission was not to write beautiful code, it was to make a first-pass implementation with the side-aim of using features of VS Code's Python extension. And I achieved that.

Interestingly, the challenge didn't set out how or where this code is to be used. That makes a difference to what I might do next. If I needed to plumb it into some existing project I could treat my attempt as a spike and code it more cleanly in whatever style the project uses.

If I wanted to re-use pieces in some more exhaustive coverage of the API that I was building, I might factor out some helper functions such as getting the authentication token and making a client session object.

If I wanted to exploit automation to explore the room API I might leave it as it is for now, and hack new bits in as I had questions that I'd like to answer.

Let's take that last example. What kind of questions might I have that this crummy tool could help with? Well, I wondered whether the application could cope with multiple creation requests.

To begin with, I modified my script to loop and create 10 rooms then loop and check that the server returns them all. This is multiple_rooms.py and you can see that it's pretty similar to the original.

Next I wondered whether I could create rooms in parallel rather than sequentially. I had to hack the code about a little to accomplish this, although you can see that the bones of it are still create a token, create rooms, check rooms: multiple_rooms_parallel.py.

To facilitate the parallelism, I had to write a function to create a room (make_a_room) which is called on a list of room names.

While I was playing with that function I noticed that checking for created rooms seemed to be non-deterministic. Sometimes all the rooms would be found, other times only some, and occasionally none. Using the web interface to Restful Booker it appeared that all of them were present, so I traced the script execution in the Python debugger and found that the server did not always return all of the rooms the script had created. After a short delay, though, the list was fully populated.

To expose what looks like an interesting issue more clearly (maybe for a bug report and fix-checker) I made a second function (check_rooms_exist) which fetches the list of rooms the server claims are there and checks against the list of rooms the test code thinks it created. I removed the assertions in this function to make success and fail easier to see in bulk.
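The comparison at the heart of that function might look something like the sketch below. To keep it runnable without a server, this version takes the server's list as a parameter rather than fetching it, which is a simplification of what the text describes; the room names in the example are made up.

```python
def check_rooms_exist(created_names, server_names):
    """Report, for each room the script created, whether the server lists it.

    No assertions here: the function only returns (name, found) pairs so
    that successes and failures can be eyeballed in bulk.
    """
    listed = set(server_names)
    return [(name, name in listed) for name in created_names]


# Hypothetical data: the script created three rooms but the server
# response so far contains only one of them (plus an unrelated room).
created = ["room_a", "room_b", "room_c"]
server_says = ["room_b", "some_other_room"]

for name, found in check_rooms_exist(created, server_says):
    print(name, found)
```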

The script calls this checking function twice. Here's a typical run:

    ---- attempt 1 ----
    2022-10-02T09:37:02.953068__884680 False
    2022-10-02T09:37:02.953068__236820 True
    2022-10-02T09:37:02.953068__774290 False
    ---- attempt 2 ----
    2022-10-02T09:37:02.953068__884680 True
    2022-10-02T09:37:02.953068__236820 True
    2022-10-02T09:37:02.953068__774290 True

The first column is the room name, composed of a timestamp (shared by all names in a run) and a random number (one per name in the run). The second column is whether or not the name exists in the list of rooms returned by the server.
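Names of that shape could be generated along these lines. The function name, the double-underscore separator, and the six-digit range are inferred from the sample output above rather than taken from the script, so treat them as assumptions.

```python
from datetime import datetime
import random


def make_room_names(count):
    """Build `count` names sharing one timestamp, each with its own random suffix."""
    # One timestamp for the whole run; timespec forces microseconds to be
    # included even in the unlikely case that they are exactly zero.
    timestamp = datetime.now().isoformat(timespec="microseconds")
    # Six-digit random suffix per name. Collisions are possible but
    # unlikely for small runs; this sketch does not guard against them.
    return [f"{timestamp}__{random.randint(100000, 999999)}" for _ in range(count)]


names = make_room_names(3)
```

The shared timestamp makes it easy to pick out one run's rooms from everything else on the server, while the suffix tells the rooms in that run apart.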

In attempt 1, the first time check_rooms_exist is called, only one room apparently exists (True), although during room creation calls the server said that all three were created.

Then, in attempt 2 a second or so later, when the function is called again, the server says all of them are there.

Is this a problem? Maybe yes. Or no. From a user perspective perhaps it's good enough: when the room they just created occasionally doesn't appear in the list of rooms, refreshing the page is likely to show it.

But the script could now become a tool for testing tolerance of the observed behaviour. Let's say we're not prepared for the user to need to refresh twice and we assume this would take two seconds. Well, we can modify the script to create ever larger numbers of rooms and put a two-second delay between the two attempts to retrieve the list of rooms.

When we start to see False in the attempt 2 list, we will have an idea about the load required to go past acceptable performance and can decide how often it's likely to be seen in practice and whether we need to attempt to fix it.
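As a rough sketch of that experiment, the loop below steps through increasing room counts and reports the first one at which rooms are still missing after the delay. The create_rooms and list_rooms callables are hypothetical: they stand for whatever code creates a batch of rooms and fetches the server's current list, and are passed in so the loop itself does not depend on the API details.

```python
import time


def missing_after_delay(create_rooms, list_rooms, count, delay_seconds=2.0):
    """Create `count` rooms, wait, then return the names the server does not list."""
    created = create_rooms(count)   # hypothetical: returns the names it created
    time.sleep(delay_seconds)       # the refresh time we are prepared to tolerate
    listed = set(list_rooms())      # hypothetical: returns names the server reports
    return [name for name in created if name not in listed]


def find_breaking_load(create_rooms, list_rooms, counts, delay_seconds=2.0):
    """Return the first count at which rooms are still missing, or None if none are."""
    for count in counts:
        if missing_after_delay(create_rooms, list_rooms, count, delay_seconds):
            return count
    return None
```

A call might look like find_breaking_load(create_rooms, list_rooms, [10, 50, 100, 500]), with the counts chosen to bracket the load you expect in practice. A single run only gives one data point per count, so repeating it a few times would give a better feel for how often the delay is exceeded.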

We could experiment in other dimensions too. For example, would longer names, or descriptions, or more metadata make a difference to the delay? Would duplicate room names be accepted in parallel? What happens if we try to delete rooms before the server will admit that they are present?

The code for this parallel script is still reasonably ugly. But that's OK, it's an experimental tool not a production artefact. It's automation that can help us to explore, to ask questions, and then to answer them. I make and use tools like this all the time.

Highlighting: pinetools