What feels like a zillion years ago, I wrote a few pieces for the Ministry of Testing's Testing Planet newspaper. Understandably, they've since mostly been replaced by much better stuff on the Dojo, but MoT have kindly given me permission to re-run them here.

--00--

You want to hone your skills, to develop, consolidate and extend them. You want to learn new skills that will help you do what you do more effectively, efficiently, efficaciously, or just effing better. You want to find ways to learn new skills more easily, and you know that learning skills is itself a skill, to be improved by practice. You want to grow the skill that tells you what skills you should seek out in order to accomplish the next challenge. You want to find ways to communicate skills to your colleagues and the wider testing community. You know skills are important. You need skills, as the Beastie Boys said, to pay the bills.

So what you probably don't want is to be told that increased skill can ultimately lead to an increased reliance on luck.

And if that's the case you should definitely not read Michael Mauboussin's The Paradox of Skill: Why Greater Skill Leads to More Luck, a white paper in which he says that, in any environment where there are two forces in competition, both striving to improve their skills, there will be a point when skill sets cancel each other out and outcomes will be more dependent on fortune than facility. He cites a baseball example in which Major League batting averages have decreased since 1941 even though by parallel with other sports and their records (such as athletics and the time to run 100 metres) you'd expect the players, techniques, equipment, coaching and so on have all improved and hence also averages.

The reason why they haven't, he concludes, is that batters don't bat in isolation and as their skills improve, so do the skills of the pitchers. It's a process where improvements on either side cancel out improvements on the other. In this scenario, chance events can have a disproportionate effect.

Much as I'd never want to roll out a conflict analogy to describe the relationship between Dev and Test *cough* it's easy to see this kind of arms race in software development. At a simple level: New code is produced. Issues are reported. Issues are fixed. Old code is wrapped in test. New issues become harder to find. Something changes. New issues are found. And so on. The key is what something we choose to change.

We'll often try to provoke new issues by introducing novelty - new test vectors, data, approaches and of course skills. But we can also echo Mauboussin and try to invoke chance interactions in areas we already know and understand to break through the status quo. For example, we might use time and/or repetition to increase our chances of encountering low-probability events. You can't control every parameter of your test environment so running the same tests over and over again will naturally be testing in different environments (while a virus checker is scanning the disk or an update daemon is reinstalling some services, or summer time starts etc). Or we could operate in a deliberately messy way during otherwise sympathetic testing, opening the way for other events to impinge perhaps by re-using a test environment from a previous round of test. James Bach likes to galumph through a product and then there are approaches like fuzzing and other deliberate uses of randomness in interactions with, or data for, our application.

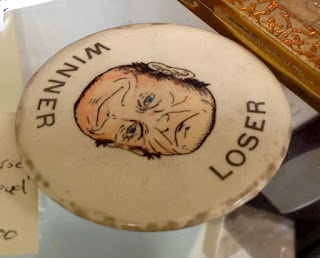

Of course, there's still a skill at play here: choosing the most productive way to spend time to simulate chance. But if you can't decide on that you could always toss a coin.

You've got skills, but still you know you want more skills. Skills for testing, naturally, for the tools you use, for choosing which tools to use, for the domain you work in, the kind of product you work on, the environment your product is deployed in, for working with your colleagues, for reporting to your boss, for managing your boss, for selecting a testing magazine to read, for searching for information about all of the above.

You want to hone your skills, to develop, consolidate and extend them. You want to learn new skills that will help you do what you do more effectively, efficiently, efficaciously, or just effing better. You want to find ways to learn new skills more easily, and you know that learning skills is itself a skill, to be improved by practice. You want to grow the skill that tells you what skills you should seek out in order to accomplish the next challenge. You want to find ways to communicate skills to your colleagues and the wider testing community. You know skills are important. You need skills, as the Beastie Boys said, to pay the bills.

So what you probably don't want is to be told that increased skill can ultimately lead to an increased reliance on luck.

And if that's the case, you should definitely not read Michael Mauboussin's *The Paradox of Skill: Why Greater Skill Leads to More Luck*, a white paper in which he argues that in any environment where two forces are in competition, both striving to improve their skills, there comes a point when the skill sets cancel each other out and outcomes depend more on fortune than facility. He cites a baseball example in which Major League batting averages have decreased since 1941, even though, by analogy with records in other sports (such as the time to run 100 metres in athletics), you'd expect the players, techniques, equipment, coaching and so on to have improved, and batting averages along with them.

The reason they haven't, he concludes, is that batters don't bat in isolation: as their skills improve, so do the skills of the pitchers. Improvements on either side cancel out improvements on the other, and in this scenario chance events can have a disproportionate effect.
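To see why narrowing the gap between competitors hands more of the outcome to luck, here's a toy simulation. It's my own illustrative model, not Mauboussin's analysis: each side's skill is drawn from a normal distribution (`mean_skill`, `skill_spread`), each contest adds independent normal luck (`luck_spread`), and we count how often the more skilled side actually wins. The function name and its parameters are hypothetical.

```python
import random


def win_rate_of_more_skilled(skill_spread: float, luck_spread: float,
                             mean_skill: float = 0.0, trials: int = 20_000,
                             seed: int = 1) -> float:
    """Estimate how often the more skilled of two competitors wins a contest.

    Toy model (an assumption for illustration, not Mauboussin's own):
    skill ~ Normal(mean_skill, skill_spread) for each side, and each
    contest score is skill plus independent Normal(0, luck_spread) luck.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    skilled_side_wins = 0
    for _ in range(trials):
        a_skill = rng.gauss(mean_skill, skill_spread)
        b_skill = rng.gauss(mean_skill, skill_spread)
        a_score = a_skill + rng.gauss(0.0, luck_spread)
        b_score = b_skill + rng.gauss(0.0, luck_spread)
        # Count the contest when the side with more skill also scores higher.
        if (a_skill > b_skill) == (a_score > b_score):
            skilled_side_wins += 1
    return skilled_side_wins / trials


if __name__ == "__main__":
    # Both sides keep improving, but the gap between them narrows.
    for mean, spread in ((0.0, 2.0), (5.0, 1.0), (10.0, 0.5), (20.0, 0.1)):
        rate = win_rate_of_more_skilled(spread, 1.0, mean_skill=mean)
        print(f"mean skill {mean:>4}, spread {spread:>3}: "
              f"more skilled side wins {rate:.0%}")
```

Raising `mean_skill` for both sides changes nothing in this model, because only the difference in skill matters. Shrinking `skill_spread` relative to `luck_spread` pushes the better side's win rate down towards a coin toss, which is the paradox in miniature.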

Much as I'd never want to roll out a conflict analogy to describe the relationship between Dev and Test *cough*, it's easy to see this kind of arms race in software development. At a simple level: New code is produced. Issues are reported. Issues are fixed. Old code is wrapped in tests. New issues become harder to find. Something changes. New issues are found. And so on. The key is which something we choose to change.

We'll often try to provoke new issues by introducing novelty: new test vectors, data, approaches and, of course, skills. But we can also echo Mauboussin and try to invoke chance interactions in areas we already know and understand, to break through the status quo. For example, we might use time and/or repetition to increase our chances of encountering low-probability events. You can't control every parameter of your test environment, so running the same tests over and over again naturally means running them in slightly different environments (while a virus checker is scanning the disk, while an update daemon is reinstalling some services, as summer time starts, and so on). Or we could operate in a deliberately messy way during otherwise sympathetic testing, opening the way for other events to impinge, perhaps by re-using a test environment from a previous round of testing. James Bach likes to galumph through a product, and then there are approaches like fuzzing and other deliberate uses of randomness in our interactions with, or data for, the application.
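The arithmetic behind leaning on repetition is simple if you assume (a big assumption in a real environment) that each run is independent and has the same small probability p of hitting the rare condition: the chance of seeing it at least once in n runs is 1 - (1 - p)^n. The sketch below computes that, and pairs it with a minimal seeded random-input loop in the spirit of fuzzing. `hypothetical_percentage` is an invented stand-in for code under test with a deliberately planted divide-by-zero bug, and the other function names are likewise just for illustration.

```python
import random
from collections.abc import Callable


def chance_of_seeing(p_per_run: float, runs: int) -> float:
    """Probability of hitting an event at least once in `runs` repetitions,
    assuming each run is independent with the same probability p_per_run."""
    return 1.0 - (1.0 - p_per_run) ** runs


def runs_needed(p_per_run: float, confidence: float) -> int:
    """Smallest number of independent runs giving at least `confidence`
    chance of seeing the event once. Assumes 0 < p_per_run < 1 and
    confidence < 1, otherwise the loop may never finish."""
    runs = 0
    while chance_of_seeing(p_per_run, runs) < confidence:
        runs += 1
    return runs


def hypothetical_percentage(part: int, whole: int) -> float:
    """Hypothetical code under test, with a planted bug when whole == 0."""
    return 100.0 * part / whole


def fuzz(func: Callable[[int, int], float], iterations: int = 1_000,
         seed: int = 42) -> list[tuple[tuple[int, int], str]]:
    """Call func with random small integers and collect any exceptions.

    The generator is seeded so that every failure found can be replayed."""
    rng = random.Random(seed)
    failures = []
    for _ in range(iterations):
        args = (rng.randint(0, 10), rng.randint(0, 10))
        try:
            func(*args)
        except Exception as exc:  # any exception is worth a closer look
            failures.append((args, type(exc).__name__))
    return failures


if __name__ == "__main__":
    print(f"1-in-100 event over 50 runs: {chance_of_seeing(0.01, 50):.0%}")
    print(f"runs for 95% confidence: {runs_needed(0.01, 0.95)}")
    found = fuzz(hypothetical_percentage)
    print(f"fuzzing found {len(found)} failures, e.g. {found[:3]}")
```

For instance, under those assumptions a 1-in-100 event needs 299 runs before you have a 95% chance of meeting it once. Seeding the random generator means any failure the fuzz loop stumbles on can be replayed exactly, which keeps the chance in the inputs rather than in the investigation.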

Of course, there's still a skill at play here: choosing the most productive way to spend your time simulating chance. But if you can't decide on that, you could always toss a coin.

Image: https://flic.kr/p/edbAgc